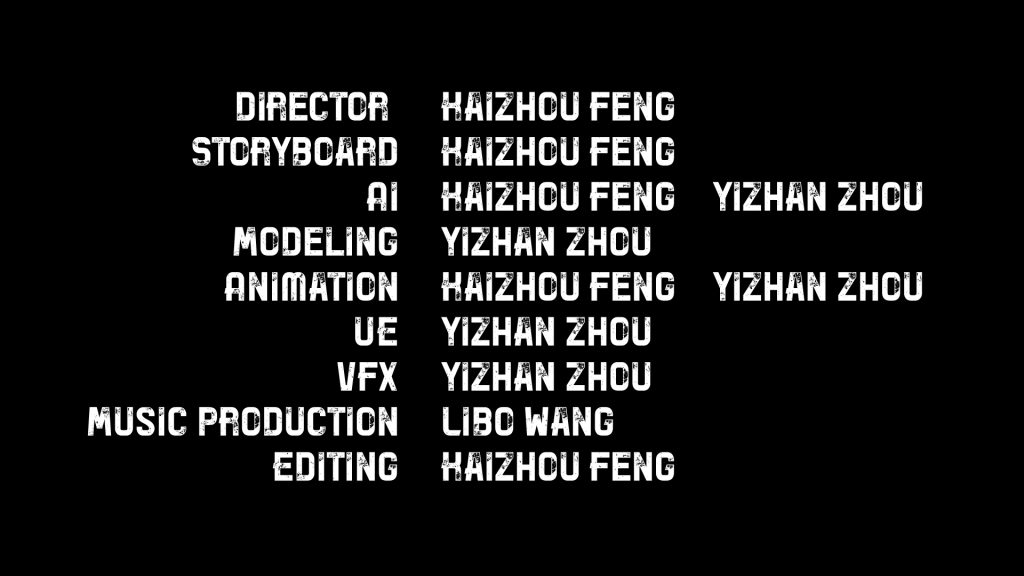

2024/03/11 19:20

Final Edition

//

//

//

2024/03/09 17:21

UE5

Flame test in Unreal Engine 5

*Model from the internet

//

//

//

2024/03/03 17:45

X-Particles

Water simulation

We used the X-Particles plugin to produce the water simulation and rendered the sequences in C4D.

2024/03/01 19:50

C4D

Modeling

We modeled a sphere as the planet for the explosion and made three shots of the movement between the Moon and the Earth.

//

//

//

2024/02/28 13:02

UE5

Water simulation

We used the fluid live plugin in Unreal Engine to simulate water.

//

//

//

2024/02/25 15:55

All of the AI-generated clips

2024/02/16 16:51

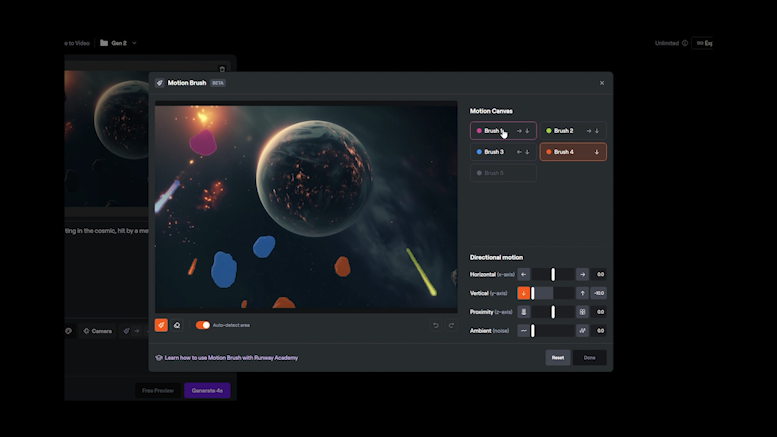

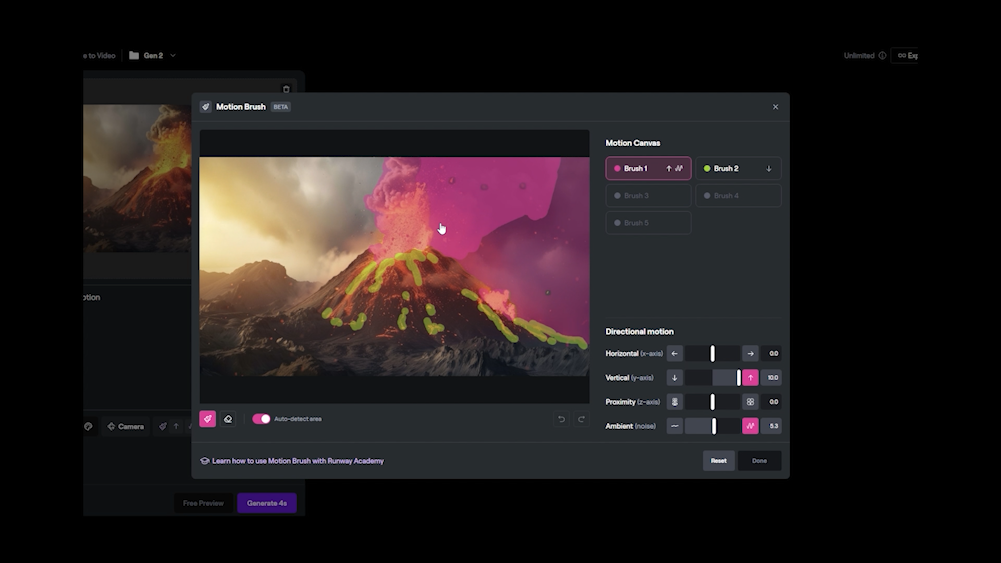

Shot: A meteor hits the early-stage Earth.

Shot: A tsunami hits the cities, and people drown in the water.

2024/02/11 20:15

Runway

AI test

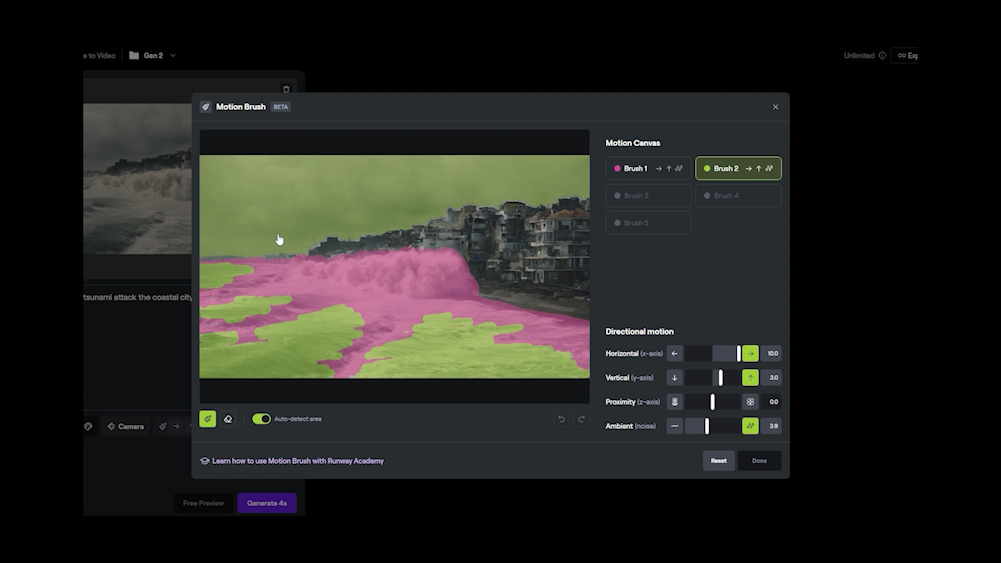

Shot: A volcano erupts.

Shot: The atmosphere is gone.

//

//

//

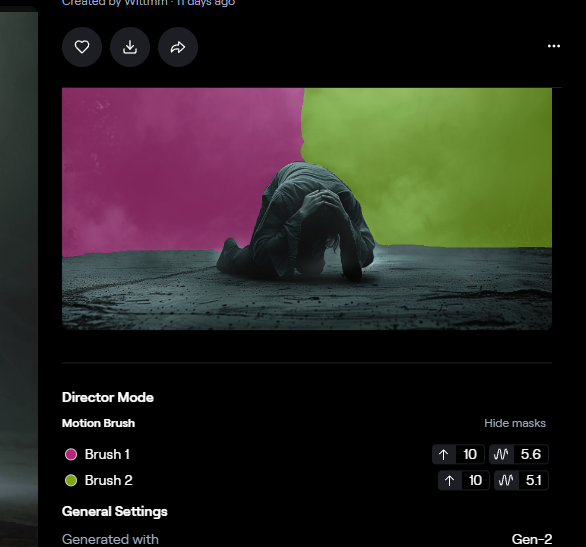

2024/02/08 16:55

Then we turned to Runway, where we could control what’s in the frame. By brushing different areas of the frame, we set the direction in which the objects moved and how much they moved.

Just so you know, we did not take a shortcut. We generated over 300 shots in Runway, based on our own words and the pictures we generated in Midjourney. We chose among them very carefully to make the final edition exactly the way we wanted.

AI-tool test:

Runway:

Clip 1: A volcano erupts.

Clip 2: People burn in the lava and fire.

//

//

//

2024/02/06 15:23

AI-tool test:

We used Midjourney to create some images.

AI-tool test:

When we first came up with the story, we thought we couldn’t handle all the VFX in the film. AI seemed to be the perfect tool to do the water and fire. Plus, we had wanted to try AI ever since we saw some good-quality AI-generated short films on the internet. In practice, AI wasn’t as intelligent as we thought. We tried a tool called Pika first, but we had barely any control over it; it just generated random footage.

PIKA

It is hard to customise some elements.

clip1

clip2

clip3

//

//

//

2024/01/31 17:30

1. Experimental Film

2. Brief: UNESCO

3. Members

Kaizhou Feng, 3D Computer Animation. k.feng0320231@arts.ac.uk

Yizhan Zhou, 3D Computer Animation. y.zhou0320239@arts.ac.uk

Libo Wang, Music Production. l.wang0220233@arts.ac.uk

4. Idea

We are going to list a few factors that help stabilize the environment and make this planet habitable.

For example, the gravitational pull between the Moon and the Earth controls the ocean tides.

The Earth spins at a rather slow speed, so it has stable days, nights and seasons.

The magnetic field is stable, so enough air stays gathered around the planet for creatures to breathe.

Amino acids form proteins and nucleotides form DNA, a highly random and complicated process that had to happen for life to be born.

5. Goal

We want to show what a disaster it would be if any of these factors changed.

We shouldn’t take these factors for granted; so many strict conditions must be met for life to be born and to live in a suitable environment.

We want people to realize how lucky we are to have this habitable environment, and that we need to cherish it.

6. Technique

We will render some footage with traditional software such as Unreal Engine and Maya and use some AI-generated footage, then edit it all together with music and a voice-over.

7. Final Outcome

There will be an experimental animated video with music.

//

//

//